Google has just shared which kinds of URLs gave its crawlers the most trouble during 2025, and the list shows some real pain points that site owners should know about. The insight came from the latest Search Off the Record podcast with Google’s Gary Illyes and Martin Splitt.

What Google Struggled With

Crawling is the process where Googlebot browses the internet to find new pages and understand site content, readying it for search results. In 2025, Google identified several patterns that made this harder than usual.

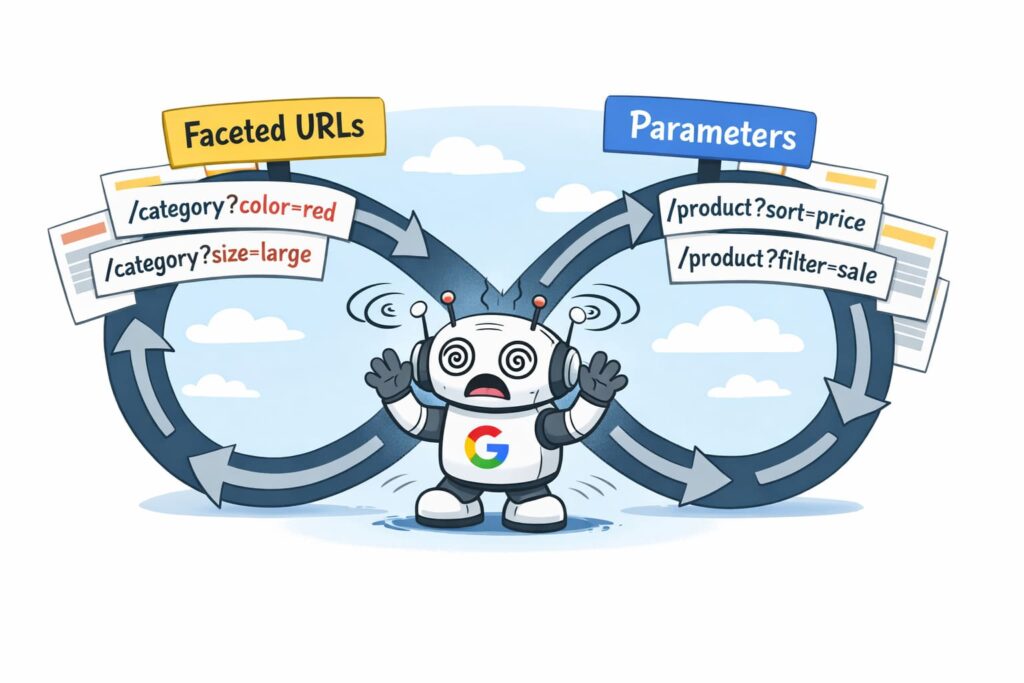

Faceted navigation was the biggest issue

Faceted navigation accounted for about half of the crawling problems last year. These are filter systems that let users sort products or listings in many different ways. On big sites, this can create millions of unique URLs that look different to Googlebot but actually show mostly the same content. Having so many similar paths can trap bots and waste resources.
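To see how quickly filters multiply, here is a minimal sketch in Python. The facet names and values are made up for illustration, and the count assumes each filter is either unset or set to exactly one value. Real sites often allow multi-select and arbitrary parameter order, which pushes the number higher still.

```python
# Hypothetical facets for a single product listing page (illustrative only).
FACETS = {
    "color": ["red", "blue", "green", "black"],
    "size": ["s", "m", "l", "xl"],
    "brand": ["acme", "globex", "initech"],
    "sort": ["price_asc", "price_desc", "newest"],
}


def count_filter_urls(facets):
    """Count distinct URLs when each facet is either unset or set to one value."""
    total = 1
    for values in facets.values():
        total *= len(values) + 1  # +1 for "this filter is not applied"
    return total


if __name__ == "__main__":
    # 5 * 5 * 4 * 4 = 400 URL variants for one listing page
    print(count_filter_urls(FACETS))
```

Four modest filters already yield 400 crawlable variants of one listing. Multiply that by every category on a large store and the "millions of URLs" figure is easy to reach.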

About one quarter of the trouble came from action parameters in URLs

These are little add-ons like ?add_to_cart=true that don’t change the main page content, but make Googlebot think they might be worth crawling separately. That quickly balloons the number of URLs for a single product.

Irrelevant Parameters

Another 10 percent of the issues were caused by irrelevant parameters, such as session IDs and tracking tags, where Googlebot isn’t always sure if they alter the page’s content.
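One way a site can reason about action and irrelevant parameters is to strip them and see which URLs collapse into the same address. The sketch below uses Python's standard `urllib.parse`. The parameter names in `IGNORED_PARAMS` and `IGNORED_PREFIXES` are assumptions for illustration, not a list published by Google, and would need to match the parameters a given site actually uses.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed parameter names; adjust to the parameters your own site emits.
IGNORED_PARAMS = {"add_to_cart", "add_to_wishlist", "sessionid", "sid", "gclid", "fbclid"}
IGNORED_PREFIXES = ("utm_",)


def canonicalize(url):
    """Drop action, session, and tracking parameters and sort the rest."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
        and not key.lower().startswith(IGNORED_PREFIXES)
    ]
    kept.sort()  # stable ordering so ?a=1&b=2 and ?b=2&a=1 collapse together
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


if __name__ == "__main__":
    print(canonicalize("https://example.com/p/42?add_to_cart=true&utm_source=x&color=red"))
```

The normalized URL is a natural candidate for the page's canonical link, since every parameter variant of the same product maps to one address.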

Smaller shares of the trouble came from plugins or widgets that dynamically add extraneous bits to URLs, and from technical oddities like double-encoded URLs.
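Double encoding happens when an already percent-encoded URL is encoded again, so a space becomes `%2520` instead of `%20`. The following is a rough heuristic for spotting it in your own logs, not something Google has described: if decoding a URL twice keeps changing it, it was probably encoded twice. It will also flag the rare URL that legitimately contains a literal `%20` as text.

```python
from urllib.parse import unquote


def looks_double_encoded(url):
    """Heuristic: True if percent-decoding changes the URL two times in a row."""
    once = unquote(url)
    twice = unquote(once)
    return once != url and twice != once


if __name__ == "__main__":
    for candidate in (
        "https://example.com/a%20b",    # encoded once
        "https://example.com/a%2520b",  # encoded twice
    ):
        print(candidate, looks_double_encoded(candidate))
```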

Why This Matters

When bots get stuck in loops of pages that look different but actually aren’t useful, it can slow down crawling and even put extra load on web servers. Google’s own team summed it up like this: once Googlebot finds these tangled URL forests, it has to crawl a lot of them before it can decide which ones matter.

That can delay how quickly new content gets discovered and indexed, and in extreme cases, can make a site sluggish or unresponsive while the crawl is happening.

Lessons for Web Owners

For online businesses and publishers, the update is a reminder to think about how URLs are structured. Too many variations can confuse both people and machines.

Tools like canonical tags, robots.txt exclusions, and careful handling of filter URLs can help guide bots to the real, valuable content instead of letting them chase every quirky URL they find.
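Before shipping robots.txt exclusions, it helps to check which URLs a rule would actually catch. Google's robots.txt handling supports `*` as a wildcard and `$` as an end-of-URL anchor in paths. The sketch below translates such patterns into regular expressions. The `DISALLOW` patterns are hypothetical examples, and the code is a simplification: it ignores `Allow` rules and the precedence logic a real parser applies.

```python
import re

# Hypothetical Disallow patterns for action, session, and sort parameters.
DISALLOW = ["/*?*add_to_cart=", "/*?*sessionid=", "/*?*sort="]


def robots_pattern_to_regex(pattern):
    """Translate a robots.txt path pattern using * and $ into a compiled regex."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape literal pieces and let each * match any run of characters.
    body = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_blocked(path_and_query, patterns=DISALLOW):
    """True if any pattern matches from the start of the path (simplified check)."""
    return any(robots_pattern_to_regex(p).match(path_and_query) for p in patterns)


if __name__ == "__main__":
    for path in ("/product/42", "/product/42?add_to_cart=true", "/shoes?color=red&sort=price"):
        print(path, is_blocked(path))
```

Running a sample of real URLs from server logs through a check like this is a cheap way to confirm that a rule blocks the parameter clutter without also blocking the clean product and category pages.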
